Teachers everywhere are turning to software to detect AI in student work. The logic seems sound at first, because machines do spot patterns faster than people. Yet those same systems often misfire, and a single false positive can damage a student's trust far more than any one assignment is worth.

To avoid this, teachers should not rely solely on AI detection software. Instead, they should treat these tools as clues and read the essay with the same curiosity they’d bring to a first draft. Abrupt transitions, hollow phrases, and arguments that sound too polished are cues a careful human reader often catches better than a machine.

The better solution starts before suspicion. Students who get structured writing guidance rarely feel the need to use AI tools in the first place. Platforms like AI essay writer can help them refine essays while keeping their own voice intact.

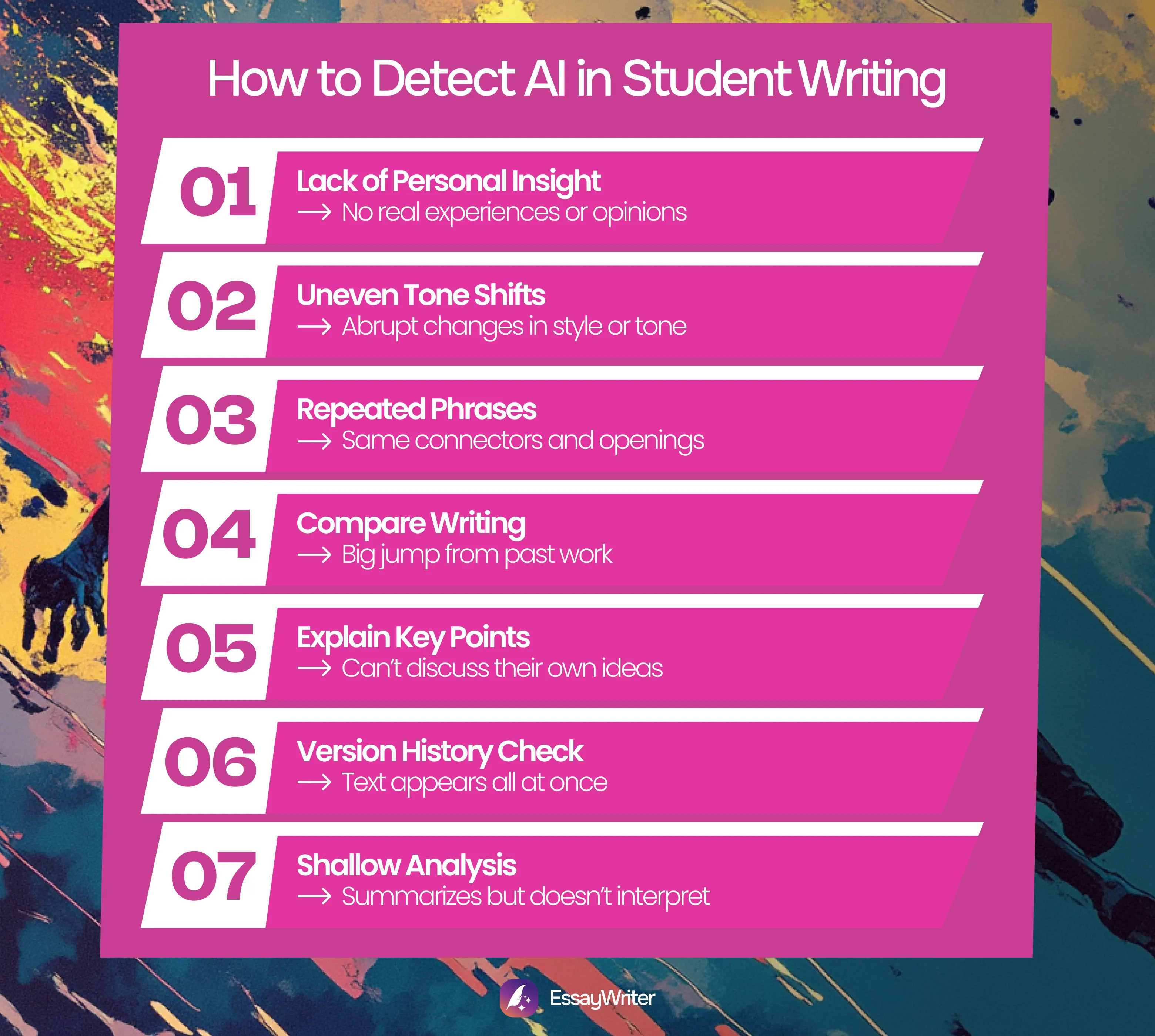

7 Ways to Detect AI in Student Writing

AI detectors can calculate word probabilities, but they cannot judge voice or depth. When you rely on them alone, you risk mistaking genuine effort for fraud or missing real misuse altogether. A better approach is to combine software checks with your own close reading. Here are seven ways to spot AI-generated content in students’ work:

1. Notice a Lack of Personal Insight

One of the simplest signs that a student used AI is a lack of individuality in the writing. When you look for AI-generated content, start with how the student connects with the topic. Most genuine essays contain at least one moment of opinion or memory. Students often refer to things they have actually experienced, and those details are messy but personal.

AI tools tend to skip them, producing smooth but generic statements instead of a perspective. A student writing about climate change might mention a school recycling project; an AI tool is more likely to mention "environmental awareness in today's world." One shows lived experience. The other repeats common phrasing found online.

When you check for personal touch, note whether the essay feels grounded. Does it include distinct experiences or language that sounds like someone thinking through a problem? If not, you may be looking at AI text. Encourage students to include their viewpoint early.

2. Watch Out for Uneven Tone Shifts

Inconsistency in tone or rhythm can signal AI-generated writing. Students build their style slowly, usually over several assignments, and their word choice, tone, and even sentence length evolve gradually. When a new paper suddenly reads like a professional report or sounds unnaturally formal, pause and look closer.

AI-generated content often jumps between tones within a single section. It might start with conversational phrasing, then drift into textbook precision, and that sudden switch feels mechanical. Students rarely shift tone that sharply without feedback or editing help.

Read one paragraph aloud. If the transitions feel jarring, you might be dealing with AI-generated content. Human writing may have rough edges, but it usually keeps a steady voice. Software trained on mixed sources can blend very different styles.

3. Pay Attention to Repeated Phrases

When you check student work for AI, repetition often stands out. Many AI models rely on template phrases to connect ideas, and these phrases fill space without moving the argument forward. Here’s what to look for:

- The same transition phrases appearing in multiple paragraphs.

- Identical sentence openings like “This shows that” or “It is important to note.”

- Overused connectors that link ideas without adding new information.

- Patterns that sound polished but lack variation in rhythm or tone.

Students sometimes repeat phrases, too, but their repetition has purpose. They might circle back to a main point or use familiar terms from class. AI repetition feels automatic, as if the tool is trying to sound complete.

To test this, underline phrases that occur more than once and ask whether each repetition actually adds meaning. If every paragraph opens with the same connectors or reuses identical phrasing, the text may have come from an AI writing tool. Real students vary their language, even when they make grammar mistakes, and that inconsistency shows learning in progress.
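For a stack of essays, the underlining step can be approximated in a few lines of code. The Python sketch below is illustrative only and is not an AI detector: it counts sentence openings that recur and tallies a hypothetical list of stock phrases taken from the examples above. The function names, the three-word opening window, and the naive sentence splitting are all assumptions, and every hit still needs a human to judge whether the repetition adds meaning.

```python
import re
from collections import Counter

# Hypothetical stock phrases, taken from the examples in this section.
STOCK_PHRASES = ("this shows that", "it is important to note")


def split_sentences(text: str) -> list[str]:
    # Naive split on ., ! or ? followed by whitespace; good enough for a read-through aid.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def repeated_openings(text: str, n_words: int = 3, min_count: int = 2) -> dict[str, int]:
    """Return sentence openings (first n_words words) that appear at least min_count times."""
    openings = Counter()
    for sentence in split_sentences(text):
        words = re.findall(r"[a-z']+", sentence.lower())
        if len(words) >= n_words:
            openings[" ".join(words[:n_words])] += 1
    return {phrase: n for phrase, n in openings.items() if n >= min_count}


def stock_phrase_counts(text: str, phrases: tuple[str, ...] = STOCK_PHRASES) -> dict[str, int]:
    """Count non-overlapping occurrences of each stock phrase, ignoring case."""
    lowered = text.lower()
    return {p: lowered.count(p) for p in phrases if lowered.count(p) > 0}


if __name__ == "__main__":
    sample = (
        "This shows that recycling matters. It is important to note that schools help. "
        "This shows that students care. We started a compost bin last spring."
    )
    print(repeated_openings(sample))    # {'this shows that': 2}
    print(stock_phrase_counts(sample))  # {'this shows that': 2, 'it is important to note': 1}
```

Counting openings rather than whole sentences keeps the check focused on the template connectors described above, while leaving the actual judgment about purpose and meaning to the teacher.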

4. Compare With Earlier Writing

One of the most effective ways to identify AI-generated text in student work is comparison. Review the student’s earlier submissions: their transitions, phrasing, and structure usually reveal a distinctive writing fingerprint.

If the latest submission is suddenly grammatically flawless, uses complex vocabulary, or displays advanced syntax that did not appear before, investigate further. Ask the student to describe how they prepared this draft. Sometimes students use grammar tools or writing aids responsibly; other times, the leap in sophistication signals AI use.

Teachers familiar with their students’ voices have a clear advantage. Keep a sample of earlier essays or discussion posts for reference. Even two short paragraphs written in class can provide a baseline. Comparing style side by side often shows differences no algorithm can capture.
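If you keep baseline samples digitally, a rough numeric comparison can show where to read more closely. The sketch below assumes three crude style measures (average sentence length, average word length, and vocabulary variety) and an arbitrary 25% tolerance. None of these come from a validated method, and a flagged difference is a prompt for conversation, not evidence of AI use. The names `style_profile` and `compare_profiles` are hypothetical.

```python
import re
from statistics import mean


def style_profile(text: str) -> dict[str, float]:
    """Compute three crude style measures for a piece of writing."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words = re.findall(r"[a-z']+", text.lower())
    if not sentences or not words:
        raise ValueError("text must contain at least one word")
    return {
        "avg_sentence_words": len(words) / len(sentences),
        "avg_word_length": mean(len(w) for w in words),
        "type_token_ratio": len(set(words)) / len(words),  # vocabulary variety
    }


def compare_profiles(baseline: str, new: str, tolerance: float = 0.25) -> dict[str, float]:
    """Return each measure whose relative change from the baseline exceeds tolerance."""
    base, current = style_profile(baseline), style_profile(new)
    flagged = {}
    for name, base_value in base.items():
        change = (current[name] - base_value) / base_value
        if abs(change) > tolerance:
            flagged[name] = round(change, 2)
    return flagged


if __name__ == "__main__":
    in_class = "I liked the trip. We saw a lot of old stuff. It was fun."
    submitted = (
        "The expedition illuminated multifaceted historical narratives, "
        "contextualizing artifacts within broader sociopolitical frameworks."
    )
    # Flags the jumps in sentence length and word length; vocabulary variety is unchanged here.
    print(compare_profiles(in_class, submitted))
```

Relative change is used instead of raw differences so that a short in-class paragraph and a longer essay can be compared on the same scale. Even so, genre, topic, and assignment length all shift these numbers, which is why side-by-side reading remains the main check.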

5. Ask if They Can Explain Key Points

Sometimes the simplest method works best: talk to the student. When you suspect AI involvement, ask the student to explain the key points of the paper. Focus on reasoning, not memory. A student who wrote the paper can usually expand on its ideas without relying on notes, recalling sources, structure, and argument flow naturally.

Students who use AI tools without understanding the content often struggle to answer simple questions. They may repeat phrases from the paper but be unable to explain why they used them. To gauge whether a student did their own writing, ask them to explain:

- How they chose their main example or piece of evidence.

- Why they structured the argument in a particular order.

- What they learned while writing the paper.

A moment of hesitation or confusion can reveal more than any detector score. This method also reinforces accountability: when students know they may need to discuss their writing, they approach the task with greater care. It turns the process from surveillance into mentorship.

6. Check the Version History

A simple but often overlooked tool is Google Docs’ Version History. When checking for AI in student writing, this feature reveals the writing process itself. Human writing unfolds gradually. Sentences appear, disappear, and return in revised form. Typos emerge and then vanish. Pauses between edits reflect thinking time.

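AI-generated work looks different. It often appears in a single paste, with no sign of gradual revision: the entire text lands on the page nearly at once, in sharp contrast with normal drafting behavior. Keep in mind that some students draft in another app and paste in the finished text, so a single large paste is a reason to ask questions, not proof on its own.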

To review it, open the document and select File > Version history > See version history. Study the timestamps and how often the document changed. A typical pattern includes many small saves and steady changes. If you see one or two large additions, ask how the draft was created. Understanding how AI checkers work is useful, but watching the writing process directly provides stronger evidence.
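To make the timestamp review more systematic, you could note the time and word count of each version in the history panel and check for growth that outpaces typing. The sketch below does not call any Google API. The `Snapshot` record, the 60-words-per-minute ceiling, and the 200-word minimum jump are hypothetical values chosen for illustration, and a flagged pair may simply be a legitimate paste from a draft written elsewhere.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """One entry noted by hand from the version history panel (hypothetical record)."""
    minutes: float  # minutes since the first version
    word_count: int


def large_jumps(
    snapshots: list[Snapshot],
    max_words_per_minute: float = 60.0,  # assumed ceiling for sustained typing
    min_jump: int = 200,                 # ignore small additions entirely
) -> list[tuple[Snapshot, Snapshot]]:
    """Return consecutive version pairs where the text grew faster than plausible typing."""
    ordered = sorted(snapshots, key=lambda s: s.minutes)
    flagged = []
    for prev, curr in zip(ordered, ordered[1:]):
        added = curr.word_count - prev.word_count
        elapsed = max(curr.minutes - prev.minutes, 1e-9)  # guard against identical timestamps
        if added >= min_jump and added / elapsed > max_words_per_minute:
            flagged.append((prev, curr))
    return flagged


if __name__ == "__main__":
    history = [Snapshot(0, 0), Snapshot(30, 150), Snapshot(32, 1200), Snapshot(90, 1350)]
    for prev, curr in large_jumps(history):
        added = curr.word_count - prev.word_count
        print(f"{added} words added between minute {prev.minutes} and minute {curr.minutes}")
```

Requiring both a minimum jump and a high rate keeps ordinary bursts of fast typing from being flagged, so the output points only at the one or two large additions worth asking the student about.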

7. Note Shallow Analysis

Depth separates human thinking from generated text. AI can summarize information accurately but often lacks interpretation. Its sentences make sense individually, yet the argument stays flat. When you read an essay that lists facts without connecting them or that avoids drawing conclusions, suspect automation.

Strong student writing moves beyond description. Students explain, compare, and evaluate; they cite sources and add commentary. AI often stops at restating. In a history paper, for example, a student might explain how one event led to another, while an AI version might simply describe both events without linking them.

To check for depth, ask yourself three things:

- Does the essay show cause and effect?

- Does it include original analysis or synthesis?

- Does it show real understanding of the material?

Wrapping Things Up: Spotting AI-Generated Content

Automation can scan patterns, but it cannot feel intention. Teachers still read tone, rhythm, and depth more accurately than any algorithm. The real test lies in patience, not software. Learning how to humanize AI-generated text also matters because it teaches students to rebuild their voice instead of hiding behind a machine's.

EssayWriter helps with that step. The platform offers structured guidance, editing support, and one-on-one academic help. Students who learn through EssayWriter gain control over their writing process and confidence in their own words. They become less dependent on AI tools and more capable of producing authentic work that sounds like them.

FAQ

What Is The Best Way To Detect AI In Student Writing?

Start by trusting your eye before the algorithm. Compare a student’s new paper with their earlier work. Notice tone changes, rhythm shifts, or sentences that sound too even. Use AI detectors as support, not as proof. Genuine consistency across drafts tells you far more than a percentage score ever could.

How Do Teachers Know When Students Are Using AI?

Teachers notice breaks in voice. A sudden leap in polish or the disappearance of small mistakes can signal that something is off. Ask the student to explain how they built the argument or chose their examples. Writers who did the work themselves recall their choices naturally; those who didn’t often hesitate.

How Do Schools Detect AI In Writing?

Most schools use a mix of tools and professional judgment. AI detectors flag potential cases, but human review confirms them. Checking version history, comparing multiple drafts, and holding brief discussions help reduce false positives and keep evaluations fair.

How To Prove A Student Used ChatGPT?

Proof requires evidence, not assumptions. Keep earlier writing samples for reference and look for clear differences in phrasing, structure, or syntax. An AI detector can support your findings, but conversation matters most. Talk with the student to understand how the paper came together before deciding on next steps.

How To Know If An Essay Was Written By AI?

AI-written essays often sound polished but hollow. They summarize without reflecting, stay rhythmically flat, and lack the small imperfections that show thinking. Compare the piece with the student’s usual tone or ask them to write a short paragraph in class. Authentic writing always carries a trace of the writer behind it.
